If you’re a marketer, chances are that you work in a CMS every day. You publish pages and blog posts, embed images and videos, add categories, connect profiles, and integrate software. But have you ever wondered what the days before CMSs were like?

Back in the 1990s, websites were static pages built from simple HTML text files that sat in a directory on a web server and were typically uploaded over FTP. Later, after Internet Explorer started supporting CSS in 1996, websites became more visually sophisticated and, over time, more interactive and dynamic.

However, building, uploading, and maintaining websites became increasingly difficult, and the time was ripe for content management systems to automate and streamline the process.

The early to mid-2000s saw CMSs become increasingly professional, and they started to help people handle not only content but also business operations, intranets, and archives.

From their inception, CMSs have been in the service of marketers, but not every CMS is created equal.

Enter the headless CMS.

Why headless CMSs are a must for marketers in 2020

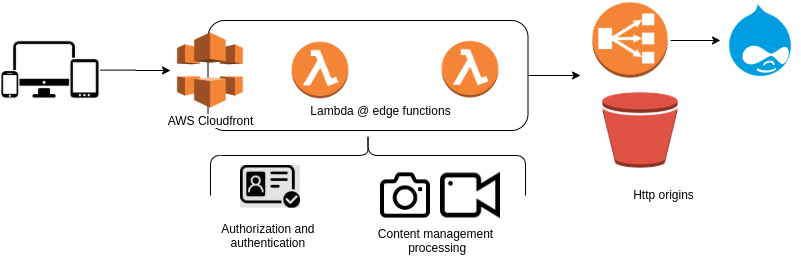

A headless CMS removes the front-end delivery layer altogether, leaving only the back end, which works as a content repository.

A headless CMS separates managing content from presenting formatted content, removing the interdependency of presentation and behavior layers.

Headless CMSs are also API-first, meaning content is exposed to front ends and other tools through an API. Because the headless architecture separates formatting from content, you can publish the same content to any device or channel.
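To make the separation concrete, here is a minimal sketch in Python. It is not taken from any particular CMS: the repository, the entry ID, and the function names are all hypothetical, and a real headless CMS would serve the JSON over HTTP rather than from an in-memory dictionary. The point is only that the stored content carries no formatting, and each front end decides how to present it.

```python
import json
from html import escape

# Hypothetical in-memory content repository. A real headless CMS keeps this
# in its own back end and exposes it through an HTTP API.
CONTENT_REPOSITORY = {
    "welcome-post": {
        "title": "Hello, headless",
        "body": "Content lives here; presentation lives elsewhere.",
    }
}


def get_entry_json(entry_id: str) -> str:
    """Stand-in for an API response: structured content with no formatting."""
    return json.dumps(CONTENT_REPOSITORY[entry_id])


def render_html(payload: str) -> str:
    """One possible front end: a fragment of a web page."""
    entry = json.loads(payload)
    return (
        f"<article><h1>{escape(entry['title'])}</h1>"
        f"<p>{escape(entry['body'])}</p></article>"
    )


def render_plain_text(payload: str) -> str:
    """Another front end: plain text, for example for an SMS or voice assistant."""
    entry = json.loads(payload)
    return f"{entry['title']}: {entry['body']}"


if __name__ == "__main__":
    payload = get_entry_json("welcome-post")
    print(render_html(payload))
    print(render_plain_text(payload))
```

Both renderers read the same payload, so changing the website’s markup never requires touching the content itself.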

These are some of the features of a headless CMS that can help marketers:

Publishing Non-Web Content

Non-web content is information and sensory experience that’s communicated to the user by means of software. It includes the code or markup that defines its structure, presentation, and interactions.

A headless CMS can help you publish non-web content in both documents and software, helping you build SPAs, for example.

Publishing Content to Multiple Channels

While traditional CMSs can also publish to different channels, headless CMSs create content and push it through multiple channels using the ‘create once, publish everywhere’ strategy.

You can publish content across devices with a coupled CMS, sure, but it might require some tweaking. With a headless CMS, the multichannel approach is not something you have to think about; it’s already built into the design.

As a result, marketers can publish content faster and more easily. All they have to do is create the content and push it everywhere.
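The ‘create once, publish everywhere’ idea can be sketched in a few lines of Python. Everything here is an illustrative assumption rather than a real CMS API: the `Entry` fields, the three channel formatters, and the 60-character limit for push notifications are made up for the example.

```python
from dataclasses import dataclass
from html import escape
from typing import Callable, Dict


@dataclass(frozen=True)
class Entry:
    """A single piece of content, created once (hypothetical fields)."""
    headline: str
    summary: str
    url: str


def for_website(entry: Entry) -> str:
    """Format the entry as an HTML teaser."""
    return (
        f'<h2><a href="{escape(entry.url)}">{escape(entry.headline)}</a></h2>'
        f"<p>{escape(entry.summary)}</p>"
    )


def for_email(entry: Entry) -> str:
    """Format the entry as a plain-text email."""
    return f"Subject: {entry.headline}\n\n{entry.summary}\nRead more: {entry.url}"


def for_push(entry: Entry, limit: int = 60) -> str:
    """Format the entry as a short notification (the limit is an assumption)."""
    text = f"{entry.headline}: {entry.summary}"
    if len(text) <= limit:
        return text
    return text[: limit - 3].rstrip() + "..."


# Registry of channels: adding a channel means adding one formatter here.
CHANNELS: Dict[str, Callable[[Entry], str]] = {
    "website": for_website,
    "email": for_email,
    "push": for_push,
}


def publish_everywhere(entry: Entry, channels: Dict[str, Callable[[Entry], str]]) -> Dict[str, str]:
    """Run the same entry through every channel formatter."""
    return {name: fmt(entry) for name, fmt in channels.items()}


if __name__ == "__main__":
    sale = Entry(
        headline="Spring sale starts today",
        summary="Save on every plan through the end of the month.",
        url="https://example.com/spring-sale",
    )
    for channel, output in publish_everywhere(sale, CHANNELS).items():
        print(f"[{channel}]\n{output}\n")
```

The marketer only ever edits the `Entry`; supporting a new channel is a matter of registering one more formatter, not rewriting the content.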

Aggregating Content

In its simplest terms, aggregation means sourcing, normalizing, and making content available for consumers. Content aggregation is one of the things a headless CMS does best, especially when it comes to aggregating content at the point of consumption, such as in the browser or app.

Headless CMSs are particularly good at aggregating content because they have a better-defined content structure, which helps you map other source content easily.
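As a rough illustration of sourcing, normalizing, and making content available, the Python sketch below maps records from two sources onto one shared structure. The sample records, field names, and field maps are invented for the example; a real setup would pull them from actual feeds or APIs.

```python
from typing import Dict, List

# Hypothetical raw records from two sources that name their fields differently.
BLOG_FEED = [
    {"headline": "Launch day", "text": "We shipped.", "published": "2020-03-01"},
]
PRODUCT_API = [
    {"name": "Widget 2.0", "description": "Now lighter.", "released_on": "2020-02-15"},
]

# Field maps: source field name -> field name in the shared content structure.
BLOG_FIELD_MAP = {"headline": "title", "text": "body", "published": "date"}
PRODUCT_FIELD_MAP = {"name": "title", "description": "body", "released_on": "date"}


def normalize(record: Dict[str, str], field_map: Dict[str, str], source: str) -> Dict[str, str]:
    """Rename a record's fields to the shared structure and tag its origin."""
    item = {common: record[original] for original, common in field_map.items()}
    item["source"] = source
    return item


def aggregate() -> List[Dict[str, str]]:
    """Source, normalize, and merge all records, newest first."""
    items = [normalize(r, BLOG_FIELD_MAP, "blog") for r in BLOG_FEED]
    items += [normalize(r, PRODUCT_FIELD_MAP, "products") for r in PRODUCT_API]
    # ISO dates (YYYY-MM-DD) sort correctly as plain strings.
    return sorted(items, key=lambda item: item["date"], reverse=True)


if __name__ == "__main__":
    for item in aggregate():
        print(item["date"], item["source"], item["title"])
```

Once the shared structure is well defined, bringing in another source is mostly a matter of writing one more field map.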

Secondary Content Management

Since a headless CMS is, in essence, a database of content with an API that delivers that content to whatever channel or platform you choose, you can use it as a secondary system to store different assets and access them faster through the API.

You can also use a headless CMS to integrate with services your coupled CMS can’t, giving you another layer of flexibility to meet your users’ needs.

10 Headless CMSs (That Marketers Won’t Hate)

Because headless architecture is one of the more advanced developments in the industry, we decided to review 10 headless CMSs. To stay credible and neutral, we analyzed the reviews of these products on the popular website g2.com. The objective is to understand how clients across the globe perceive and assess them.

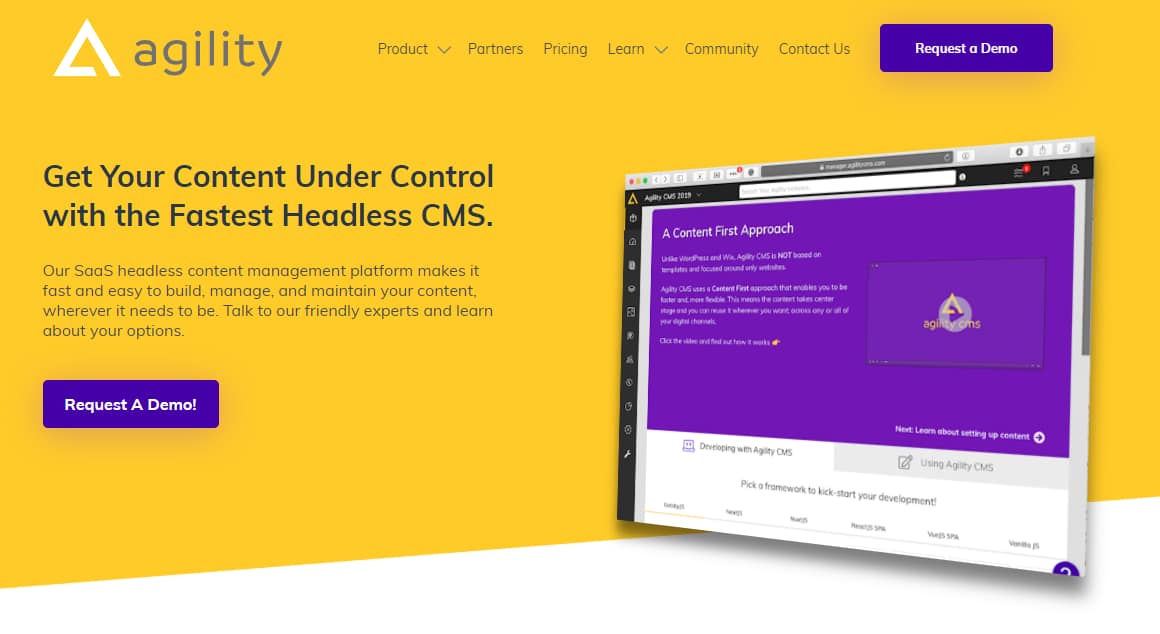

1. Agility CMS

Agility can help you be faster across all your digital channels. The CMS enables you to manage them all from one central platform and empowers your team with unlimited flexibility and scalability.

How Can Marketers Benefit From Agility CMS?

According to the reviews, Agility CMS enables teams to streamline communication and solve problems quickly as they arise. Agility CMS is the fastest headless CMS with Page Management, and it allows editors to manage not only the content but also the structure of the website. It also lets developers define Page Templates and Modules for the marketing team to use.

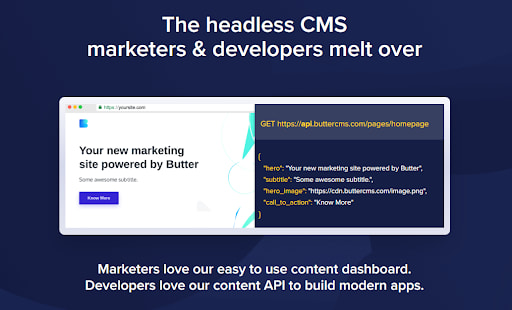

2. Butter CMS

Butter CMS advertises itself as a CMS for marketers and business owners, emphasizing its ease of use and how its features can help you grow organic traffic and conversions in a no-code environment.

How Can Marketers Benefit From Butter CMS?

One of the things reviewers on G2 Crowd highlighted was how Butter CMS helped them revamp their websites quickly while keeping them relevant and SEO-optimized.

3. Contentstack

Contentstack advertises itself as one of the first headless CMS platforms to take the needs of business owners into account, helping them improve their content management using an intuitive, no-code interface.

How Can Marketers Benefit From Contentstack?

One of the things reviewers on G2 Crowd emphasize the most is how Contentstack has enabled them to produce content at scale, even if they have thousands of suppliers across the world.

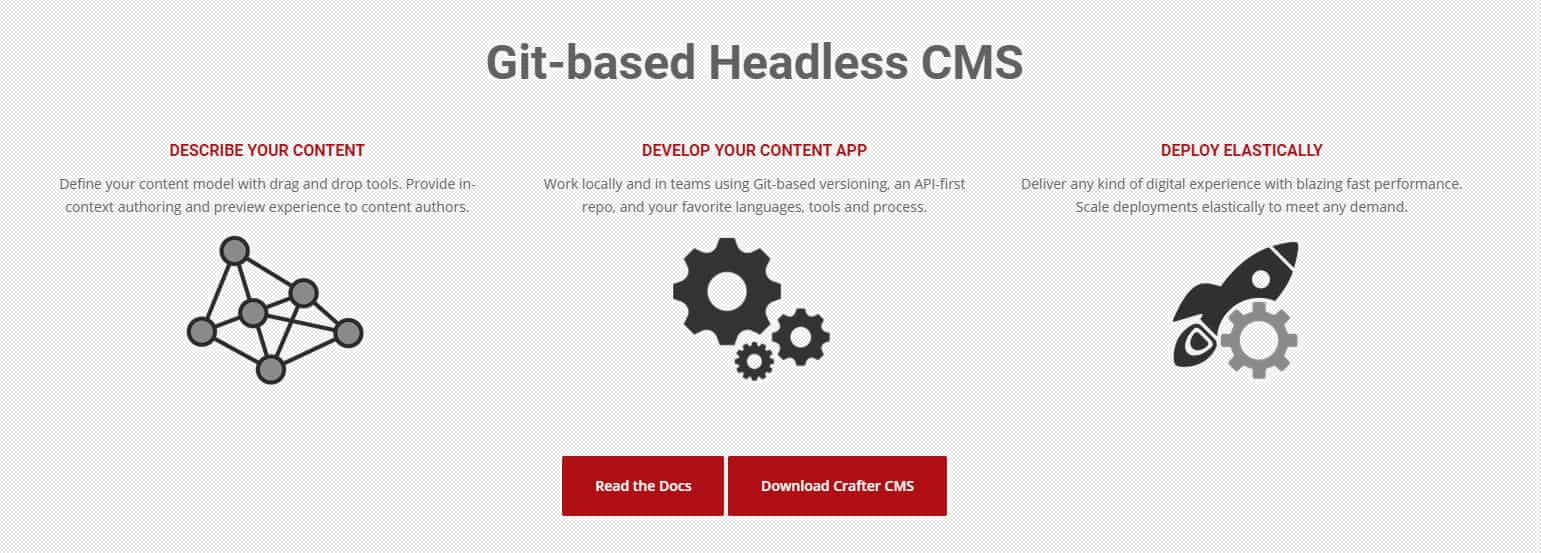

4. Crafter CMS

Crafter CMS is an open-source CMS that enables marketers to build all types of digital experience apps and websites. The CMS is backed by Git and enables developers and marketers to work collaboratively.

How Can Marketers Benefit From Crafter CMS?

According to G2 Crowd, Crafter is best used as a multi-purpose CMS for blogs, content sections, and augmenting products with headless delivery, which makes it a great choice if you’re looking for a robust secondary headless CMS to integrate with your existing solution.

5. dotCMS

dotCMS is an open-source CMS built on Java that enables marketers to make their content authoring more efficient, empowering both marketers and developers with the ability to create and reuse content to build connected, engaging, and memorable products.

How Can Marketers Benefit From dotCMS?

One of the things reviewers emphasized about dotCMS is that the CMS offers strong intranet capabilities that help marketing teams remain connected to the rest of their teams and strengthen team productivity.

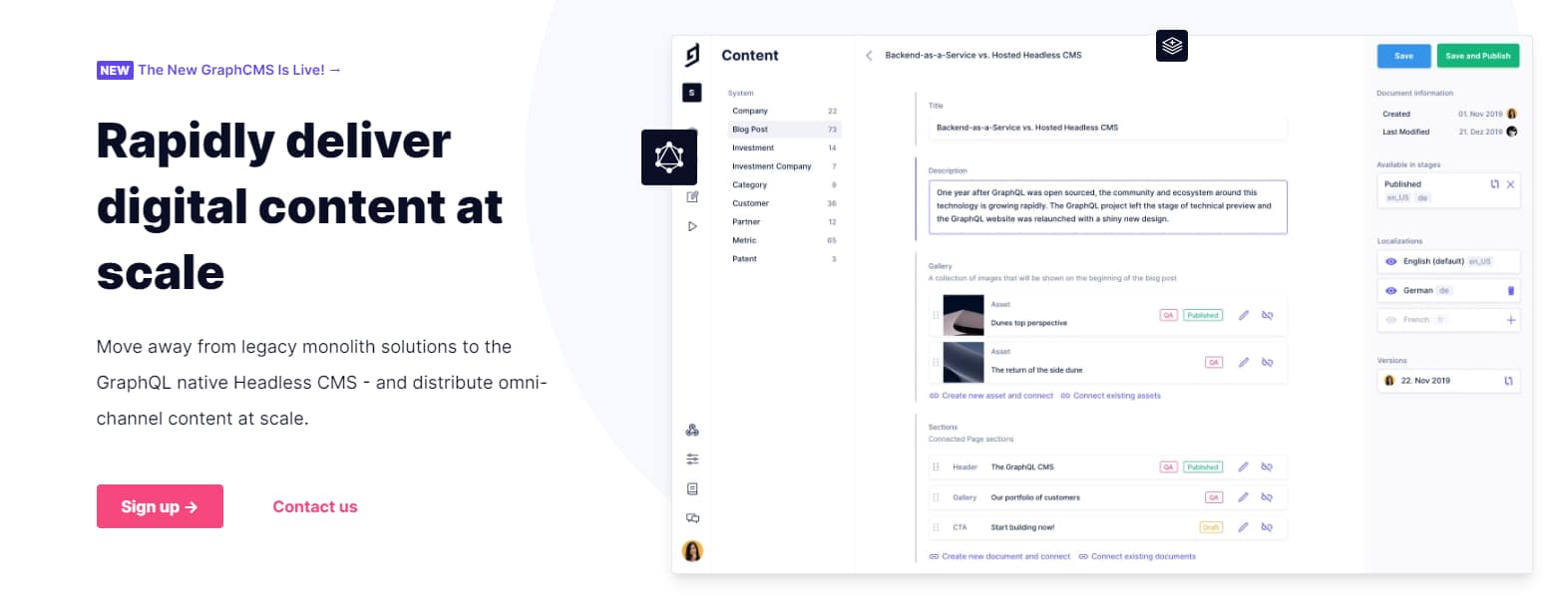

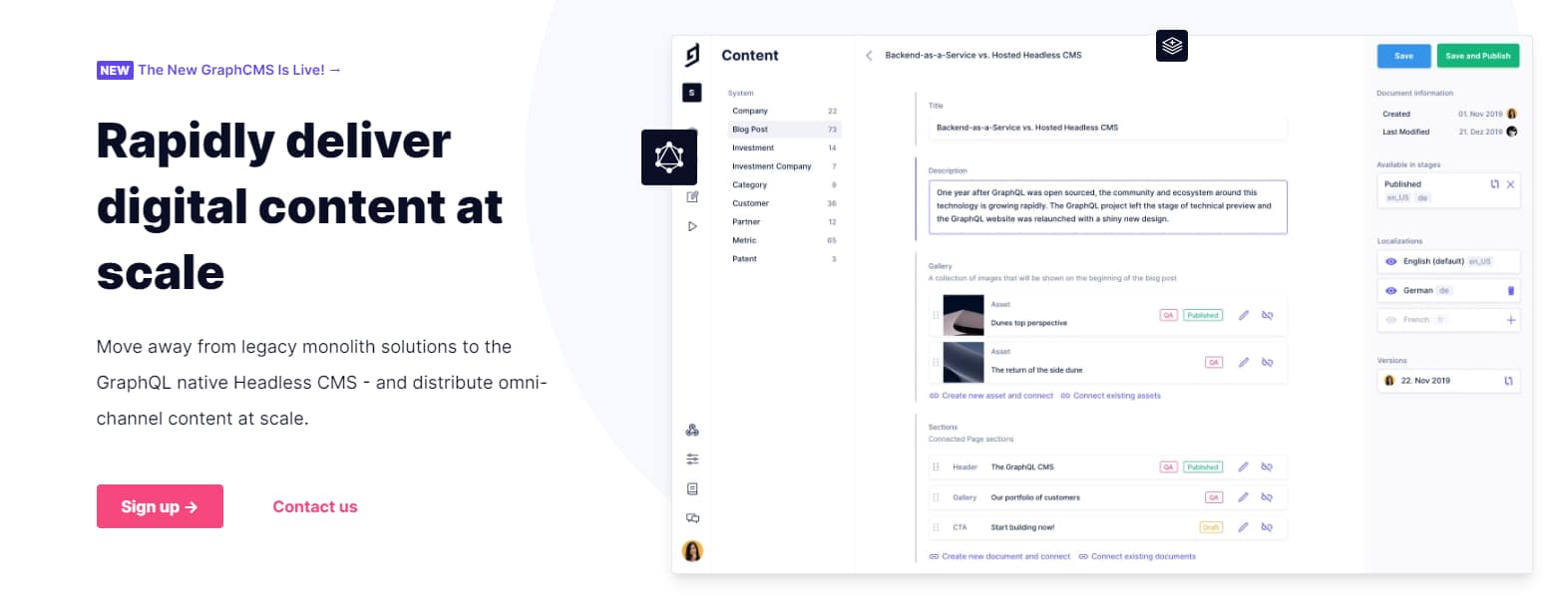

6. GraphCMS

GraphCMS focuses on giving content teams flexibility so they can focus on building better websites in less time.

How Can Marketers Benefit From GraphCMS?

The reviews on G2 Crowd describe GraphCMS as a CMS that enables marketing teams to publish content faster and at scale. Its UI makes it easy for content managers with no coding experience to publish and distribute content.

7. Kentico Kontent

Kentico Kontent advertises itself as a Content-as-a-Service platform that empowers marketers to publish better content, faster. Kontent helps companies whose content is spread across systems keep things consistent.

How Can Marketers Benefit From Kentico Kontent?

In this case, most reviewers on G2 Crowd see Kontent as a robust tool for creating and updating content as well as for making comments and revisions. The tool lends itself well to the content creation process, even for marketers who hire freelance writers to create content for them.

8. Magnolia CMS

Magnolia is a Java-based, open-source, enterprise-oriented CMS that enables companies to scale by providing them with flexible integrations and great ease of use.

How Can Marketers Benefit From Magnolia CMS?

According to G2 Crowd reviewers, Magnolia can help marketers by providing them with a centralized platform to deliver digital experiences to their visitors and clients. Magnolia enables marketers to create apps and launch new websites without creating new content, reusing existing assets to create omnichannel experiences.

9. Netlify CMS

Netlify CMS is a single-page React app that enables developers to create custom-styled templates and previews, as well as UI widgets and editor plugins, with ease. It also lets you add backends to support the APIs of different Git platforms, which makes it easier to scale and understand.

How Can Marketers Benefit From Netlify CMS?

According to the reviews, Netlify CMS is a great starting point for marketers looking for a lightweight, fast CMS that’s easy to understand, though not especially feature-rich. At the same time, marketers can give clients access so they can manage the content themselves, which is something that small business owners might want.

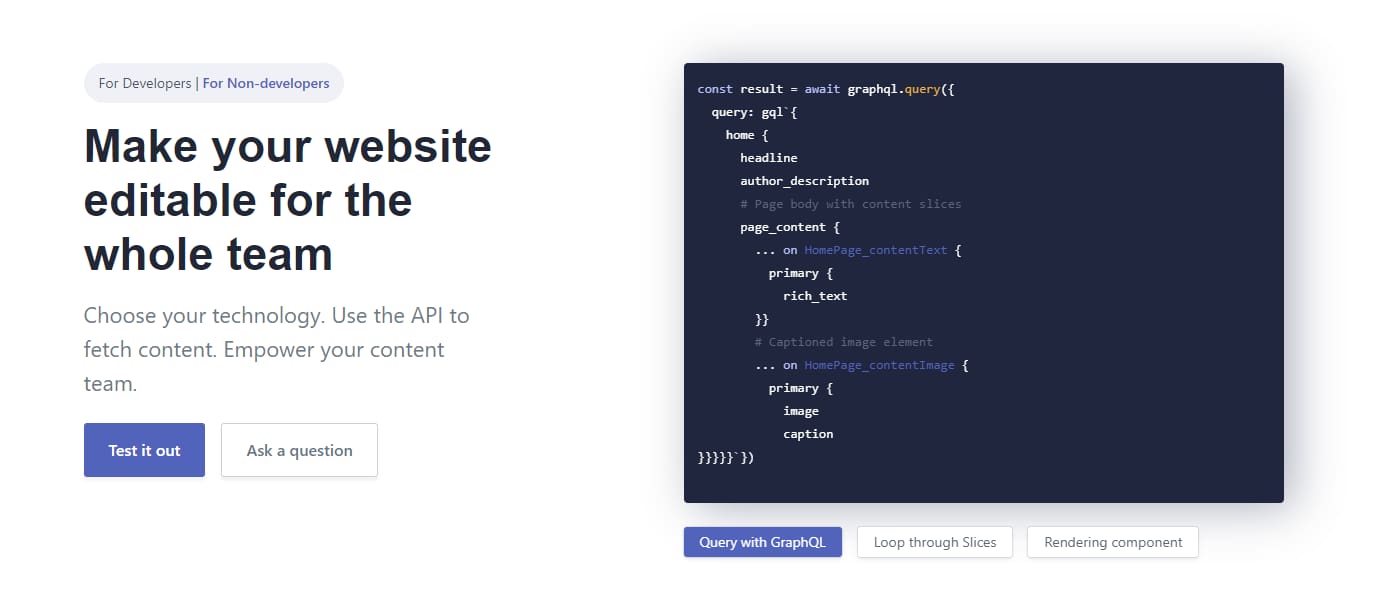

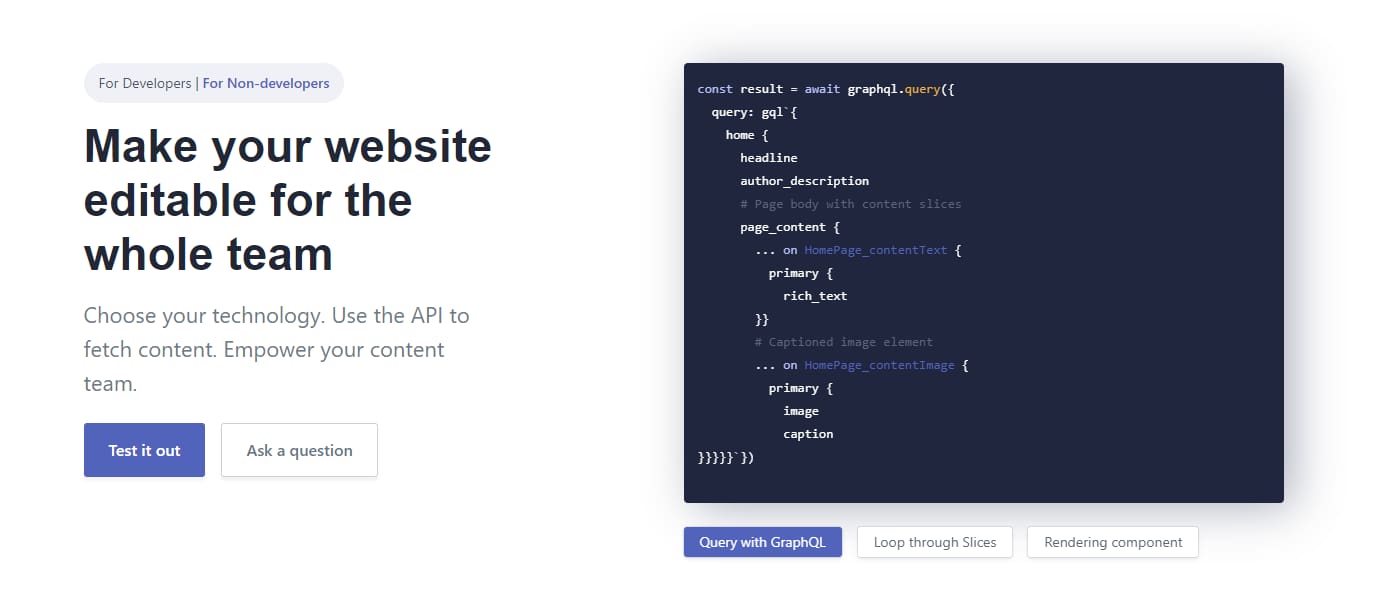

10. Prismic.io

Prismic.io presents itself as a no-nonsense content creation tool that integrates seamlessly with most of the frameworks developers currently use, enabling developers to use the technology they know best so they can focus on producing better results.

How Can Marketers Benefit From Prismic.io?

The fact that Prismic.io is framework agnostic is one of the things that marketers seem to prize the most, according to the G2 Crowd reviews. Prismic.io can be a good choice for marketers looking to test new frameworks and tech stacks quickly and without hindering the content authoring process.

Conclusion

Content marketing is evolving in its scope, features, and capabilities, and it has become crucial to today’s business models for maintaining a competitive edge. An advanced CMS can make your business model more agile and innovative. Choosing the most suitable CMS for your business is a vital decision, and a headless architecture can help marketers connect with customers through multiple channels and streamline their efforts.

