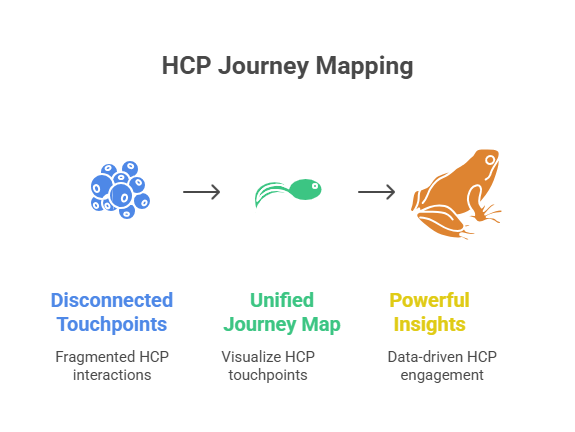

HCP journey mapping is no longer optional. It is the backbone of omnichannel pharma marketing. Without it, campaigns collapse into silos and every rupee spent becomes hard to justify. Indian pharma leaders are discovering this the hard way: content output rises week on week, yet inbound leads are nowhere to be found. Traffic dips, engagement stays flat, and every new tool feels like another disconnected widget.

The shift isn’t local; it’s global. The patient engagement solutions market was valued at US$22.5 billion in 2023 and is expected to grow to US$41.8 billion by 2028, a 13.2% CAGR. Patient engagement technology as a category is moving from US$17.3 billion in 2022 to US$27.9 billion by 2027, a 10% CAGR. Even the niche segment of interactive patient care solutions, such as in-room hospital screens and bedside touchpoints, is growing at a 15.5% CAGR, roughly doubling from US$146 million in 2022 to US$300 million by 2027. This isn’t trivia. It signals that globally, healthcare engagement is being restructured around journeys, not campaigns. India will not be spared.
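As a quick sanity check, the growth rates quoted above are consistent with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short Python sketch below recomputes them from the figures in the paragraph; the helper name `cagr` is only illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10%)."""
    return (end_value / start_value) ** (1 / years) - 1


# (label, start, end, years) taken from the market figures quoted above
markets = [
    ("Patient engagement solutions, 2023-2028 (US$ bn)", 22.5, 41.8, 5),
    ("Patient engagement technology, 2022-2027 (US$ bn)", 17.3, 27.9, 5),
    ("Interactive patient care, 2022-2027 (US$ mn)", 146, 300, 5),
]

for label, start, end, years in markets:
    print(f"{label}: {cagr(start, end, years) * 100:.1f}% CAGR")
```

Each result rounds to the rate stated in the text, so the three market projections are internally consistent.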

If pharma marketing in India continues to run on disconnected touchpoints, it will fall behind not only competitors but also patient expectations and regulatory scrutiny. Doctors expect relevance, consistency, and speed. Regulators demand transparency and compliance. Boards want measurable ROI, not excuses. Only HCP journey mapping ties these three non-negotiables together.

This article unpacks why mapping matters, how it fixes broken engagement models, why compliance becomes easier with it, and how building a unified HCP journey in India transforms marketing execution. Most importantly, it shows how your pharma HCP engagement strategy must evolve if you want to win in 2025 and beyond.

Why Pharma Marketing Keeps Missing the Mark

Fragmented Data, Fragmented Doctors

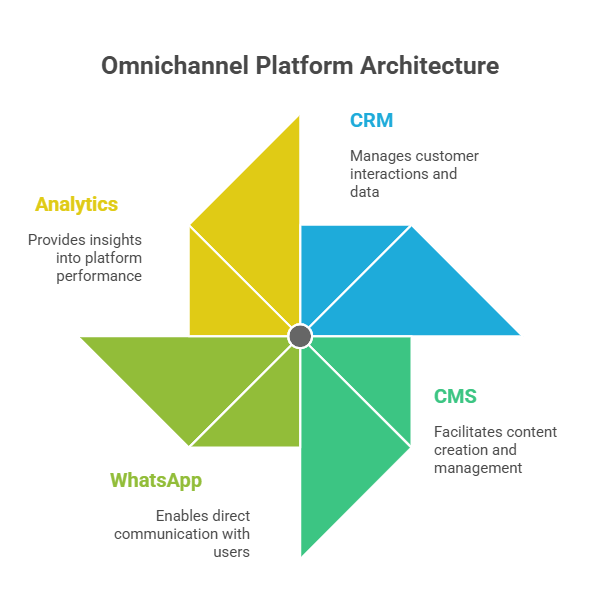

Look inside any large Indian pharma company today. The sales force runs on Veeva or Salesforce. The digital team manages webinars, WhatsApp blasts, and portals. Marketing pushes email campaigns. Compliance works in its own silo. Each channel collects data, but none speaks to the others. The result: a doctor gets three emails on hypertension, a WhatsApp about an unrelated therapy, and a rep visit with outdated slides. From the doctor’s perspective, the brand feels scattered.

This is the first failure: fragmentation. If you don’t unify, you misfire. Engagement looks busy on your dashboard, but it’s invisible in reality.

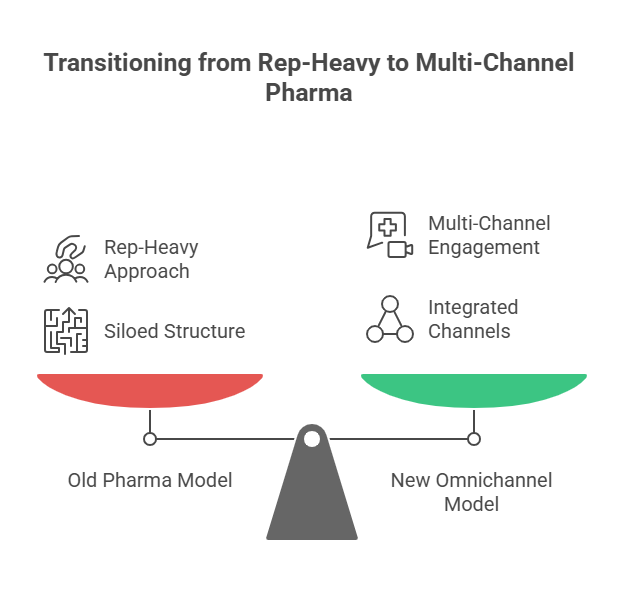

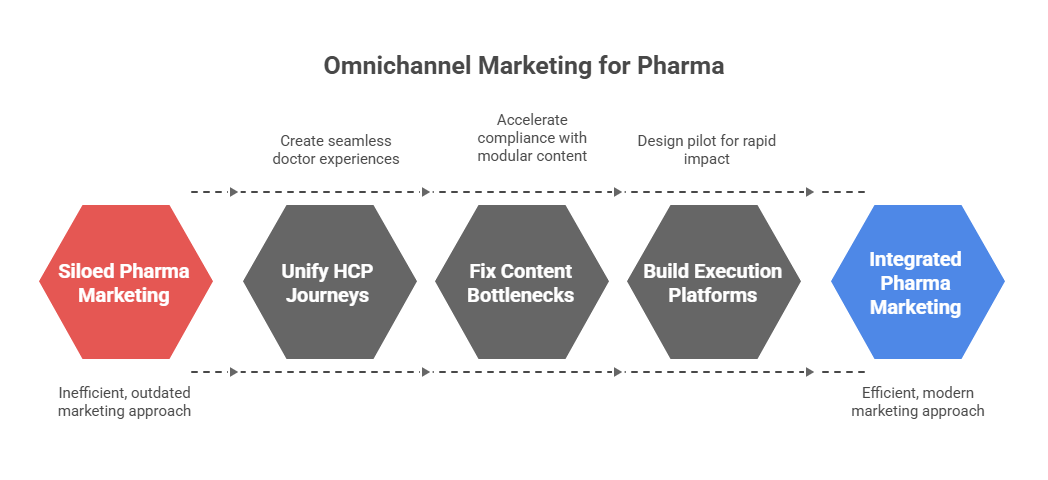

The Myth of Omnichannel Without Integration

Omnichannel has become the most abused word in pharma. Most teams use it to mean “we are on multiple channels.” But that’s not omnichannel. True omnichannel means continuity: the doctor feels the flow. An email about a webinar is linked to a portal download that shapes the next rep conversation. Without mapping, you can’t build this continuity. You’re just stacking channels, not connecting them.

The myth is dangerous because it makes marketing teams complacent. They believe they’re omnichannel because they “cover” many platforms. But if nothing connects, it’s not omnichannel; it’s noise.

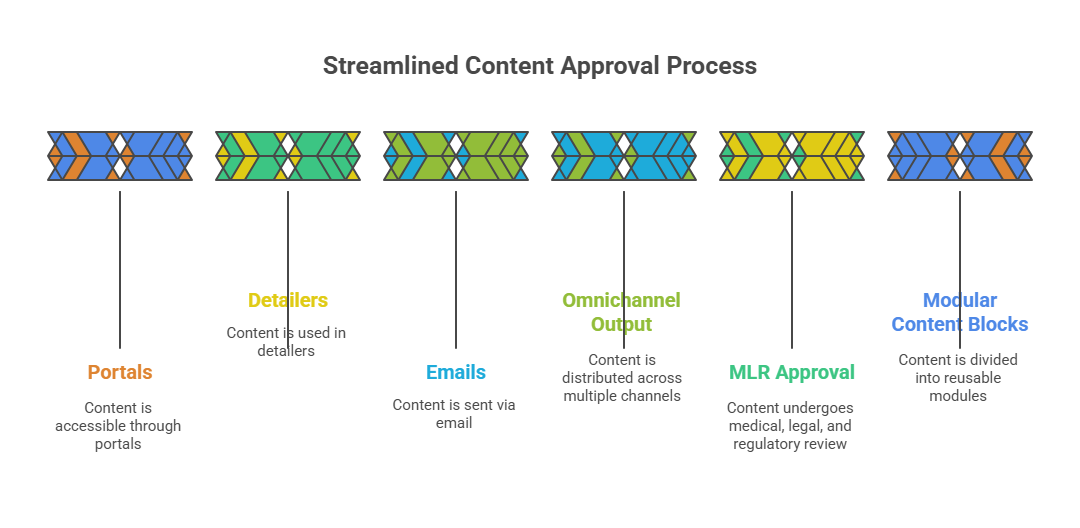

Compliance Slows You Down When It Shouldn’t

Compliance is non-negotiable in pharma marketing. Every piece of content must pass medical, legal, and regulatory review. But when compliance sits outside the journey, it slows everything down. Approvals drag. Revisions pile up. Campaigns launch late. Doctors lose interest.

Here’s the irony: when compliance is mapped into the journey itself, it speeds you up. Audit trails are automatic. Disclaimers are pre-tagged. Approvals flow with the campaign, not after it. Journey mapping turns compliance from a bottleneck into a competitive edge.

What HCP Journey Mapping Actually Solves

From Blind Spots to Clear Visibility

Without a map, you are flying blind. You don’t know if a doctor opened your email but ignored the portal. You don’t know if they dropped off after a rep call but reappeared in a webinar. Mapping fills these blind spots. It connects CRM data, digital analytics, and third-party signals into a single view. Suddenly, you’re not guessing. You’re watching the actual journey unfold.
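To make the idea of a single view concrete, here is a minimal Python sketch that merges touchpoints from separate systems into one time-ordered timeline per doctor. Everything in it is an assumption for illustration: the `Touchpoint` fields, the shared `hcp_id` key, and the sample records are hypothetical, and real CRM or analytics exports would need their own mapping step.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Touchpoint:
    hcp_id: str        # hypothetical identifier shared across systems
    source: str        # e.g. "crm", "email", "webinar"
    action: str
    timestamp: datetime


def build_timelines(*feeds):
    """Merge any number of touchpoint feeds into one time-ordered list per HCP."""
    timelines = defaultdict(list)
    for feed in feeds:
        for tp in feed:
            timelines[tp.hcp_id].append(tp)
    for events in timelines.values():
        events.sort(key=lambda tp: tp.timestamp)
    return dict(timelines)


# Illustrative, made-up records from two separate systems
crm_feed = [Touchpoint("hcp-001", "crm", "rep_call", datetime(2025, 1, 10, 11, 0))]
digital_feed = [
    Touchpoint("hcp-001", "email", "opened", datetime(2025, 1, 3, 9, 30)),
    Touchpoint("hcp-001", "webinar", "attended", datetime(2025, 1, 24, 18, 0)),
]

timelines = build_timelines(crm_feed, digital_feed)
for tp in timelines["hcp-001"]:
    print(tp.timestamp.date(), tp.source, tp.action)
```

The merged timeline shows the email open, then the rep call, then the webinar attendance in order, which is the kind of sequence no single system can show on its own.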

When visibility comes in, conversations change. Instead of debating which channel is “better,” your team asks: “What sequence worked for cardiologists in Mumbai last quarter?” That’s real strategy.

Turning Disparate Channels Into One Story

Doctors don’t think in channels. They think in experiences. If you push ten messages across five platforms, they don’t care how your team is structured. They just feel overwhelmed. Journey mapping solves this by stitching channels into a story.

Imagine: Dr. Sharma gets a WhatsApp nudge about a webinar. She attends. Post-webinar, she downloads a whitepaper from your portal. A week later, a rep follows up with insights tied to her questions. That’s not a coincidence; that’s mapping. Each touchpoint feels connected, not random.

A Foundation for Measuring Real ROI

ROI in pharma marketing is tricky. You can’t always tie engagement to prescriptions directly. But you can measure momentum: whether an HCP is moving forward or stalling. Mapping allows you to score engagement, see progression, and benchmark campaigns.

Instead of vanity metrics like open rates and likes, you measure journey progression: did the doctor move from awareness to evaluation? Did they stay engaged over three months? This is ROI that a board understands.
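One simple way to express momentum is to compare the stage a doctor was in at the start of a period with the stage at the end. The Python sketch below assumes an ordered list of stage names and monthly stage snapshots per HCP; the stage labels and the `momentum` helper are hypothetical choices, not a standard model.

```python
STAGES = ["awareness", "evaluation", "engagement", "action"]  # assumed stage names


def progression(stage_history):
    """Number of stages advanced between the first and last snapshot."""
    first, last = stage_history[0], stage_history[-1]
    return STAGES.index(last) - STAGES.index(first)


def momentum(stage_history):
    """Label an HCP as advancing, stalled, or regressing over the period."""
    steps = progression(stage_history)
    if steps > 0:
        return "advancing"
    if steps < 0:
        return "regressing"
    return "stalled"


# Three monthly snapshots for two hypothetical doctors
print(momentum(["awareness", "awareness", "evaluation"]))    # advancing
print(momentum(["evaluation", "evaluation", "evaluation"]))  # stalled
```

A real scoring model would likely weight recency and channel depth as well; this sketch only captures the forward-or-stalling question.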

Making Compliance Work With, Not Against, Engagement

Embedding Rules Into the System, Not the Workflow’s End

The biggest compliance mistake is treating it as a gate at the end. Journey mapping flips this. You design the map with compliance embedded. Content blocks are tagged for permissible use. Each trigger is pre-approved for specific contexts. This means your campaigns are born compliant, not patched later.
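As a rough illustration of content that is born compliant, the Python sketch below tags each content block with the contexts it was approved for and checks a send against those tags. The `ContentBlock` fields, the block ID, and the channel and specialty names are all assumptions for the example; actual approval rules would come from your medical, legal, and regulatory review.

```python
from dataclasses import dataclass, field


@dataclass
class ContentBlock:
    block_id: str
    approved_channels: set = field(default_factory=set)
    approved_specialties: set = field(default_factory=set)


def is_permitted(block, channel, specialty):
    """A block may be sent only in a context it was pre-approved for."""
    return (
        channel in block.approved_channels
        and specialty in block.approved_specialties
    )


# Hypothetical block approved for cardiologists via email and portal only
block = ContentBlock(
    block_id="htn-summary-01",
    approved_channels={"email", "portal"},
    approved_specialties={"cardiology"},
)

print(is_permitted(block, "email", "cardiology"))     # True
print(is_permitted(block, "whatsapp", "cardiology"))  # False
```

Because the check runs before anything is sent, an unapproved combination is blocked at the trigger rather than caught in a later review.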

Why Auditability Builds Trust Beyond Legal

Regulators demand audit trails. Doctors want reassurance. Boards want safety. Mapping provides it all. Every touchpoint is logged, time-stamped, and linked to the HCP profile. If anyone questions what was shared, you don’t scramble; you show the map.

This builds trust beyond regulators. It tells doctors you respect their boundaries. It tells boards you take compliance seriously. And it tells teams they can move fast without fear.

The UCPMP 2024 Effect on Pharma Marketing Execution

The updated Uniform Code for Pharmaceutical Marketing Practices (UCPMP) in India tightened rules on doctor engagement. Gifts and favors are gone. Content-led engagement is the only path. But content without a mapped journey looks random and risks duplication.

Journey mapping solves this by aligning all outreach with a compliant framework. You don’t just “push” content; you sequence it responsibly. It’s how you turn UCPMP from a headache into a playbook.

Building a Unified HCP Journey in India

The India-Specific Challenge of Tier-2 and Tier-3 Engagement

In metros, HCPs are digital-first. They attend webinars, download studies, respond on LinkedIn. But in Tier-2 and Tier-3 cities, the rules change. Doctors rely on WhatsApp updates, local CME events, and regional portals. If your journey map doesn’t adapt, you’ll miss half your market.

A unified HCP journey in India must account for these variations. It cannot treat Jaipur and Mumbai the same. Doctors in Coimbatore expect WhatsApp touchpoints. Doctors in Delhi expect portal-based depth. Mapping unifies both without losing local nuance.

Local Channels, Regional Languages, and Digital Habits

India’s diversity is both a strength and a source of complexity. Regional language portals, vernacular WhatsApp messages, city-specific events: these matter more than English-only email blasts. Journey mapping integrates these micro-tactics into the bigger picture.

The payoff: doctors feel respected, not generic. A cardiologist in Lucknow gets an invite in Hindi. A neurologist in Bangalore gets a portal link in English. Both feel seen. Both stay engaged.

Connecting Field Force Reality to Digital Data

Your field reps know doctors better than dashboards ever will. They know who skips webinars, who demands case studies, who prefers calls at odd hours. Journey mapping connects this human intel with digital behavior.

If the rep notes “Dr. Rao prefers WhatsApp summaries,” your digital engine respects that. If the webinar log shows “Dr. Kapoor asked about patient adherence,” your rep knows to follow up. That’s unified engagement. That’s execution.

Rethinking Pharma HCP Engagement Strategy

Moving Beyond Rep Visits and Emails

Traditional pharma engagement leaned on reps and email. Both still matter. But they’re insufficient alone. Doctors are busy, skeptical, and distracted. Your pharma HCP engagement strategy must be multi-layered.

Journey mapping helps sequence touchpoints intelligently. Instead of spamming emails, you time a WhatsApp nudge before a rep visit. Instead of random portal invites, you send content based on past interest. This is engagement that feels tailored, not transactional.

WhatsApp, Portals, Webinars: But in the Right Sequence

Channels aren’t equal. WhatsApp is quick, but shallow. Portals provide depth, but require intent. Webinars build authority, but demand time. The mistake is treating them interchangeably.

Journey mapping sequences them. WhatsApp starts the spark. Portal builds depth. Webinar cements credibility. Rep follows up with context. It’s a flow, not a scattershot.
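The flow described above can be sketched as an ordered sequence with a helper that picks the next touchpoint based on what a doctor has already completed. This is a deliberately simple Python illustration: the step names and the `next_step` function are hypothetical, and a production journey would add timing rules, opt-outs, and branching.

```python
# Order taken from the flow described above; step names are illustrative
SEQUENCE = ["whatsapp_nudge", "portal_content", "webinar_invite", "rep_follow_up"]


def next_step(completed_steps):
    """Return the first step in SEQUENCE not yet completed, or None when done."""
    done = set(completed_steps)
    for step in SEQUENCE:
        if step not in done:
            return step
    return None


print(next_step([]))                                    # whatsapp_nudge
print(next_step(["whatsapp_nudge", "portal_content"]))  # webinar_invite
print(next_step(SEQUENCE))                              # None
```

Keeping the order in one place means every channel team reads from the same sequence instead of scheduling its own sends independently.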

Personalisation Without Violating Compliance

Doctors expect personalisation. They want relevant data, not generic brochures. But pharma marketers fear crossing compliance lines. Journey mapping balances this.

By tagging content blocks for specific use cases, you can deliver tailored experiences without breaching rules. Dr. Singh gets oncology updates. Dr. Patel gets cardiology trials. Both within compliance, both feeling personalised.

What Execution Looks Like When Done Right

Journey Mapping as a Boardroom Issue, Not a Marketing Task

This is not a marketing side-project. Journey mapping should sit at board level. Why? Because it impacts revenue directly. It determines how marketing spend converts into prescription lift. It decides how compliant your company stays. It shapes brand reputation with doctors.

When boards see mapped engagement dashboards showing drop-offs, hot spots, and compliance scores, they see marketing as a strategic lever, not a cost centre.

How to Prove ROI Without Guesswork

ROI without mapping is guesswork. ROI with mapping is evidence. You can show:

- X% of HCPs moved from awareness to engagement in Q2.

- Y% progression in Tier-2 cities after WhatsApp pilots.

- Z% compliance adherence with mapped audit trails.

These numbers matter in boardrooms. They move marketing from “we think” to “we know.”
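A figure like “X% of HCPs moved from awareness to engagement” can be computed directly once each doctor’s stage at the start and end of a quarter is recorded. The Python sketch below assumes such records exist; the field names, stage labels, and sample data are made up for illustration.

```python
def pct_progressed(records, from_stage, to_stage):
    """Share (in %) of HCPs who began the period in from_stage and ended in to_stage."""
    started = [r for r in records if r["start_stage"] == from_stage]
    if not started:
        return 0.0
    moved = sum(1 for r in started if r["end_stage"] == to_stage)
    return 100.0 * moved / len(started)


# Made-up quarterly snapshot; real data would come from the journey map
q2 = [
    {"hcp_id": "hcp-001", "start_stage": "awareness", "end_stage": "engagement"},
    {"hcp_id": "hcp-002", "start_stage": "awareness", "end_stage": "awareness"},
    {"hcp_id": "hcp-003", "start_stage": "awareness", "end_stage": "engagement"},
    {"hcp_id": "hcp-004", "start_stage": "engagement", "end_stage": "engagement"},
]

share = pct_progressed(q2, "awareness", "engagement")
print(f"{share:.1f}% moved from awareness to engagement")
```

In this sample, two of the three doctors who started in awareness reached engagement; the fourth doctor is excluded because they started the quarter in a later stage.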

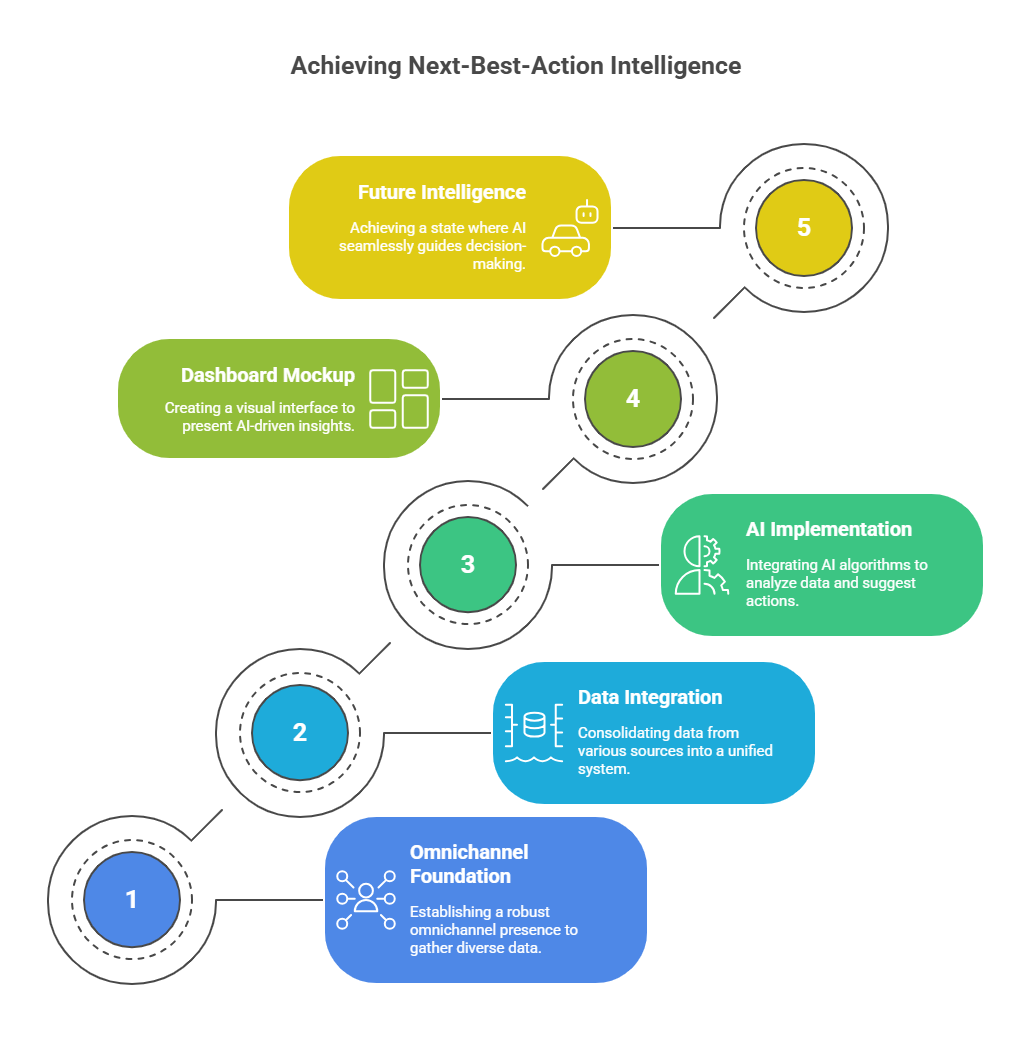

The Shift From Tools to Systems That Scale

Buying tools is easy. Building systems is hard. Tools give you dashboards. Systems give you results. HCP journey mapping is not another tool; it’s the architecture for how tools work together.

When you build systems, campaigns stop being one-offs. They become repeatable, measurable, and scalable. That’s how you grow beyond experiments.

Conclusion

Here’s the truth. Omnichannel without mapping is a mirage. It looks shiny but delivers nothing. HCP journey mapping is the foundation that makes everything else work: engagement, compliance, and ROI.

Indian pharma cannot afford to ignore this. Tier-1 doctors demand sophistication. Tier-2 doctors live on WhatsApp. Regulators demand proof. Boards demand returns. Only a unified HCP journey in India ties this together.

If your team is struggling, it’s not because they lack effort. It’s because they lack a map. Start with mapping. Execution becomes faster. Compliance becomes smoother. Results become measurable.

Doctors are done with fluff. They need clarity. Boards are done with excuses. They need ROI. Regulators are done with chaos. They need order. HCP journey mapping gives all three.

If you’re serious about fixing your marketing foundation, start by mapping your HCP journeys. Book a working session. We’ll review your channels, stack, and compliance flow, and show you where to begin. The sooner you map, the faster you win.

Frequently Asked Questions:

1. What is an HCP journey?

An HCP journey is the complete record of how a healthcare professional interacts with a brand across channels, from rep visits to webinars, portals, and WhatsApp, forming one connected experience.

2. What are the 7 steps to map the customer journey?

The seven steps are: define goals, build personas, list touchpoints, gather data, create journey stages, identify gaps, and refine the flow into a clear map for better engagement and measurable outcomes.

3. What are the 4 stages of journey mapping?

The four stages are awareness, consideration, engagement, and action. Mapping these helps brands understand where HCPs are in their decision path and how to guide them forward effectively.

4. What is meant by journey mapping?

Journey mapping is the process of visualising every touchpoint in a customer or HCP’s experience with a brand. It helps reveal gaps, align teams, ensure compliance, and build measurable engagement strategies.

5. What does an HCP do?

An HCP, or healthcare professional, diagnoses, prescribes, and treats patients. In pharma marketing, HCPs are the primary audience for compliant communication, education, and engagement strategies.