The global cloud computing market is expected to grow from $371.4 billion in 2020 to $832.1 billion by 2025, a Compound Annual Growth Rate (CAGR) of 17.5% over the forecast period, according to a report by MarketsandMarkets. This growth is driven by businesses adopting cloud computing to streamline their operations and reduce costs.
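As a quick sanity check, the three MarketsandMarkets figures can be reconciled with the standard CAGR formula, (end / start)^(1 / years) - 1. The short Python snippet below is only an arithmetic illustration; it uses nothing beyond the numbers quoted above.

```python
# Implied compound annual growth rate (CAGR) from the MarketsandMarkets figures.
start_value = 371.4   # market size in 2020, USD billions
end_value = 832.1     # forecast market size in 2025, USD billions
years = 2025 - 2020   # length of the forecast period

# CAGR = (end / start) ** (1 / years) - 1
cagr = (end_value / start_value) ** (1 / years) - 1

# Cross-check: project the 2020 value forward at the quoted 17.5% rate.
projected_2025 = start_value * (1 + 0.175) ** years

print(f"Implied CAGR: {cagr:.1%}")
print(f"2025 value at 17.5% CAGR: ${projected_2025:.1f} billion")
```

Running it prints an implied CAGR of 17.5% and a projected 2025 value of about $831.8 billion, so the reported figures agree up to rounding.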

Cloud engineering is rapidly evolving to keep up with new technologies and emerging trends. From the rise of serverless computing to the increasing importance of cybersecurity, businesses must adapt to stay ahead of the curve.

In this article, we explore the future of cloud engineering, the emerging trends and technologies to watch, and the challenges and opportunities that lie ahead for cloud engineers. So let's dive in!

The Future of Cloud Engineering: Why Does It Matter to Your Business?

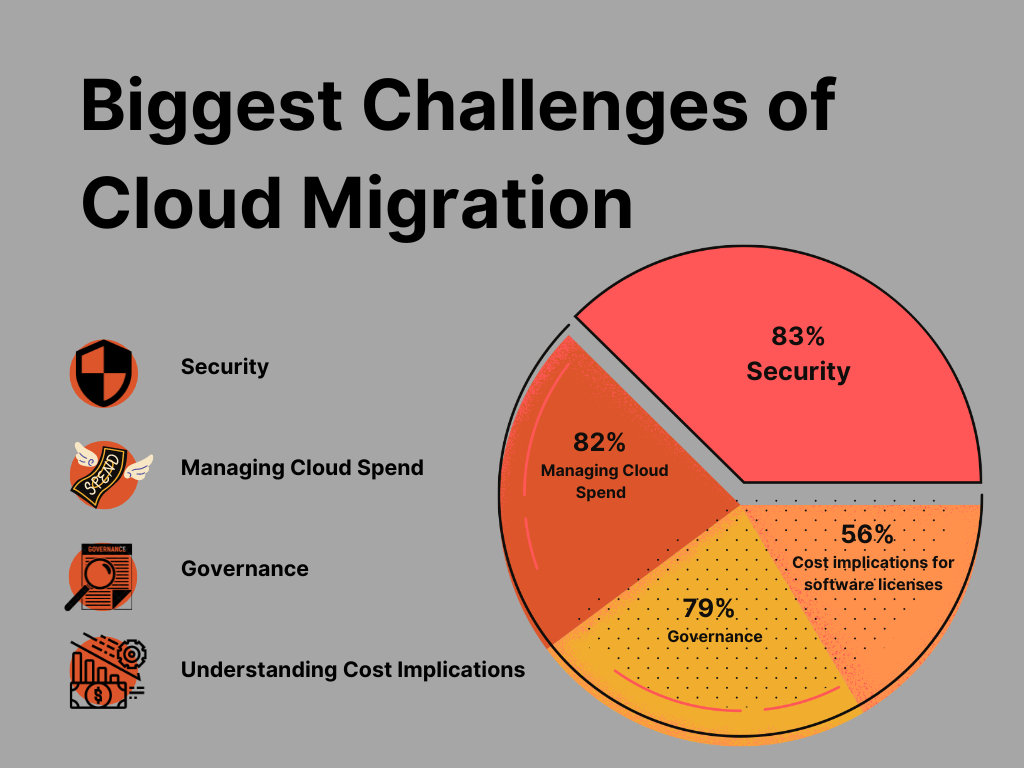

As the cloud landscape evolves, so do the challenges cloud engineers face. From the explosion of data to the rise of edge computing and the growing demand for real-time analytics, cloud engineers must continually adopt new technologies and practices to keep up.

Furthermore, with the growing concerns around cybersecurity, compliance, and data privacy, businesses are increasingly relying on cloud engineers to ensure that their cloud operations are secure, compliant, and up to date.

Given the complexity and rapid evolution of cloud engineering, businesses find it increasingly difficult to keep up with the latest trends, technologies, and best practices. As a result, many are struggling to optimize their cloud operations, mitigate risks, and drive innovation and growth.

This article aims to help businesses stay ahead of the curve by offering insights into the future of cloud engineering and the emerging trends and technologies to watch when managing cloud operations in a rapidly changing environment.

Here’s why your business should keep an eye on these trends:

- Stay ahead of the competition: By understanding the emerging trends and technologies in cloud engineering, C-suite executives can make informed decisions about their cloud strategy and gain a competitive advantage in their industry.

- Ensure cost-effective cloud operations: C-suite executives can learn about the latest cost-effective practices in cloud engineering and identify areas where they can reduce expenses while maintaining or improving the quality of their cloud services.

- Mitigate risks and ensure compliance: Cybersecurity threats, compliance regulations, and data privacy concerns are just a few of the challenges that businesses face when managing their cloud environments. By staying informed, businesses can better understand these risks and keep their cloud operations secure, compliant, and up to date.

- Drive innovation and business growth: By leveraging emerging technologies and best practices in cloud engineering, businesses can unlock new opportunities for growth and differentiation in their industry.

Emerging Trends and Technologies to Watch Out for in Cloud Engineering in 2023 and Beyond

Serverless Computing: Often delivered as Function-as-a-Service (FaaS), serverless computing is an emerging trend in cloud engineering that lets developers build and run applications without worrying about the underlying infrastructure. Developers can focus on writing and deploying code quickly, without managing servers or handling scaling and capacity provisioning themselves.

One example of serverless computing in practice is Glide, a mobile app development platform. Glide lets users build mobile apps without writing any code and relies on serverless computing for backend processing. It uses AWS Lambda, Amazon API Gateway, and Amazon S3 to process user requests and store app data, which allows it to scale up or down with user demand.
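To make the FaaS model concrete, here is a minimal sketch of a Python function written in the AWS Lambda handler style (`handler(event, context)`), assuming it sits behind an Amazon API Gateway proxy integration. It is not Glide's code: the function name `lambda_handler`, the `name` query parameter, and the response message are hypothetical. The point is that the developer writes only the function; provisioning and scaling are left to the platform.

```python
import json


def lambda_handler(event, context):
    """Hypothetical handler in the AWS Lambda Python style.

    `event` is assumed to follow the API Gateway proxy-integration shape, where
    query parameters arrive under "queryStringParameters" (None when absent).
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # hypothetical query parameter

    # Response shape expected by an API Gateway proxy integration.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local invocation with a fake event; no AWS account is needed for this.
    fake_event = {"queryStringParameters": {"name": "cloud"}}
    response = lambda_handler(fake_event, context=None)
    print(response["statusCode"], response["body"])
```

Because the handler is a plain function, it can be exercised locally with a fake event before being deployed.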

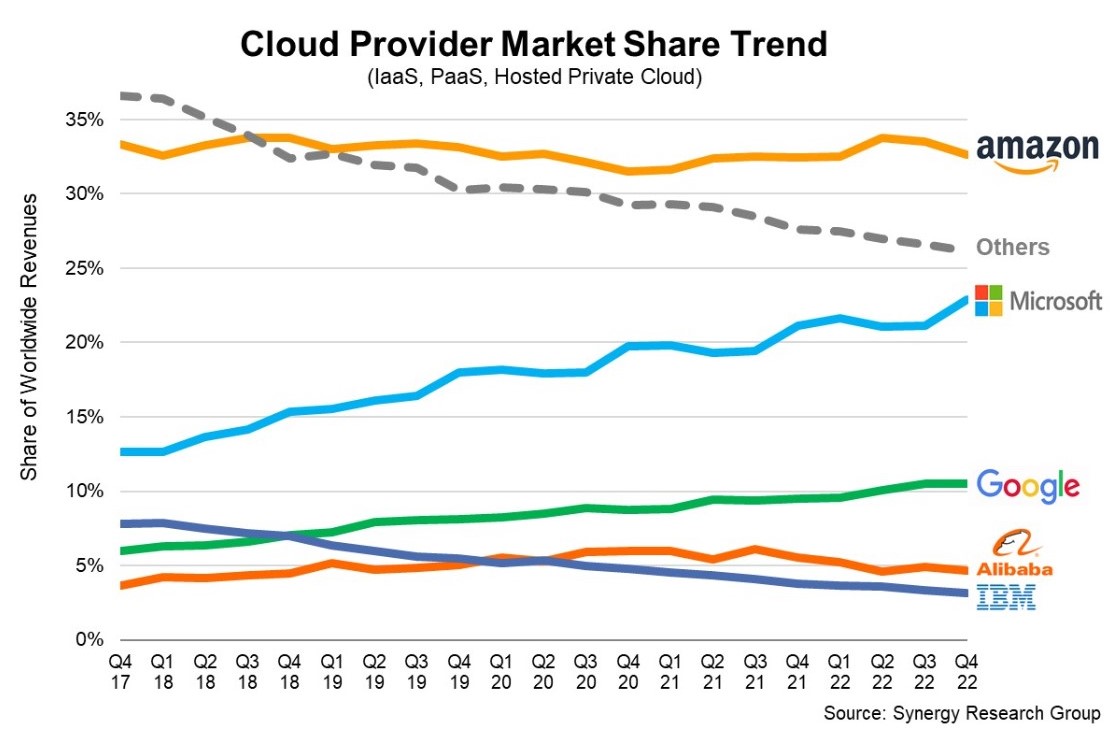

Multi-Cloud Strategies: These involve using multiple cloud platforms to achieve a specific business outcome. This approach provides greater flexibility, scalability, and redundancy than using a single cloud provider. In 2023, multi-cloud strategies are expected to gain more traction as businesses seek to reduce vendor lock-in, optimize costs, and improve performance.

Netflix is often cited as an example: it has been reported to use multiple cloud providers, including AWS, Google Cloud Platform, and Microsoft Azure. By spreading workloads across providers, a company like Netflix can optimize costs, avoid vendor lock-in, and improve service reliability.
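One common way to get the redundancy and lock-in benefits described above is to code against a provider-neutral interface and let a thin layer replicate writes and fail over on reads. The sketch below illustrates that pattern under stated assumptions: `ObjectStore`, `InMemoryStore`, and `MultiCloudStore` are hypothetical names, and the in-memory stores stand in for real provider SDKs. It does not describe Netflix's architecture.

```python
from typing import Dict, List, Optional, Protocol


class ObjectStore(Protocol):
    """Minimal provider-neutral storage interface (hypothetical)."""

    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> Optional[bytes]: ...


class InMemoryStore:
    """Stand-in for one provider's object storage; a real system would wrap an SDK."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.available = True
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        if not self.available:
            raise ConnectionError(f"{self.name} is unavailable")
        self._objects[key] = data

    def get(self, key: str) -> Optional[bytes]:
        if not self.available:
            raise ConnectionError(f"{self.name} is unavailable")
        return self._objects.get(key)


class MultiCloudStore:
    """Replicates writes to every provider and reads from the first healthy one."""

    def __init__(self, providers: List[ObjectStore]) -> None:
        self.providers = providers

    def put(self, key: str, data: bytes) -> int:
        written = 0
        for provider in self.providers:
            try:
                provider.put(key, data)
                written += 1
            except ConnectionError:
                continue  # tolerate an outage at one provider
        if written == 0:
            raise ConnectionError("no provider accepted the write")
        return written  # number of providers holding a copy

    def get(self, key: str) -> Optional[bytes]:
        for provider in self.providers:
            try:
                value = provider.get(key)
            except ConnectionError:
                continue  # fail over to the next provider
            if value is not None:
                return value
        return None


if __name__ == "__main__":
    primary = InMemoryStore("provider-a")
    secondary = InMemoryStore("provider-b")
    store = MultiCloudStore([primary, secondary])
    store.put("report.csv", b"q1,q2")
    primary.available = False  # simulate an outage at the first provider
    print(store.get("report.csv"))  # b'q1,q2', served by provider-b
```

Because application code depends only on the interface, swapping or adding a provider means writing one new adapter rather than rewriting the application.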

Edge Computing: This is a distributed computing paradigm that brings computation and data storage closer to the devices and sensors that generate the data. Processing data near its source reduces latency and the bandwidth needed to send everything to a central cloud, enabling real-time data processing and analysis.

Vynca, for example, uses edge computing to power its end-of-life planning platform, which allows patients to document their end-of-life preferences and share them with their healthcare providers. Edge computing lets Vynca process patient data in real time, reducing latency and ensuring that critical patient data is always available.
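The bandwidth saving comes from doing the first pass of processing on the device and sending only a compact result upstream. The sketch below shows that idea with hypothetical sensor readings, an assumed alert threshold, and an invented payload format; it is a generic illustration, not Vynca's platform.

```python
from statistics import mean
from typing import Dict, List


def summarize_at_edge(readings: List[float], threshold: float) -> Dict[str, object]:
    """Reduce a window of raw sensor readings to a small summary on the edge device.

    Only this summary (including any out-of-range readings) would be sent to the
    cloud, instead of every raw sample. Threshold and payload fields are hypothetical.
    """
    if not readings:
        raise ValueError("readings must not be empty")
    alerts = [r for r in readings if r > threshold]  # flagged locally, with no round trip
    return {
        "count": len(readings),
        "mean": round(mean(readings), 2),
        "max": max(readings),
        "alerts": alerts,
    }


if __name__ == "__main__":
    window = [36.6, 36.8, 37.0, 39.2, 36.9]  # hypothetical raw samples
    print(summarize_at_edge(window, threshold=38.0))
```

In a real deployment, the window size and which raw samples to forward would be tuned to the latency and bandwidth budget.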

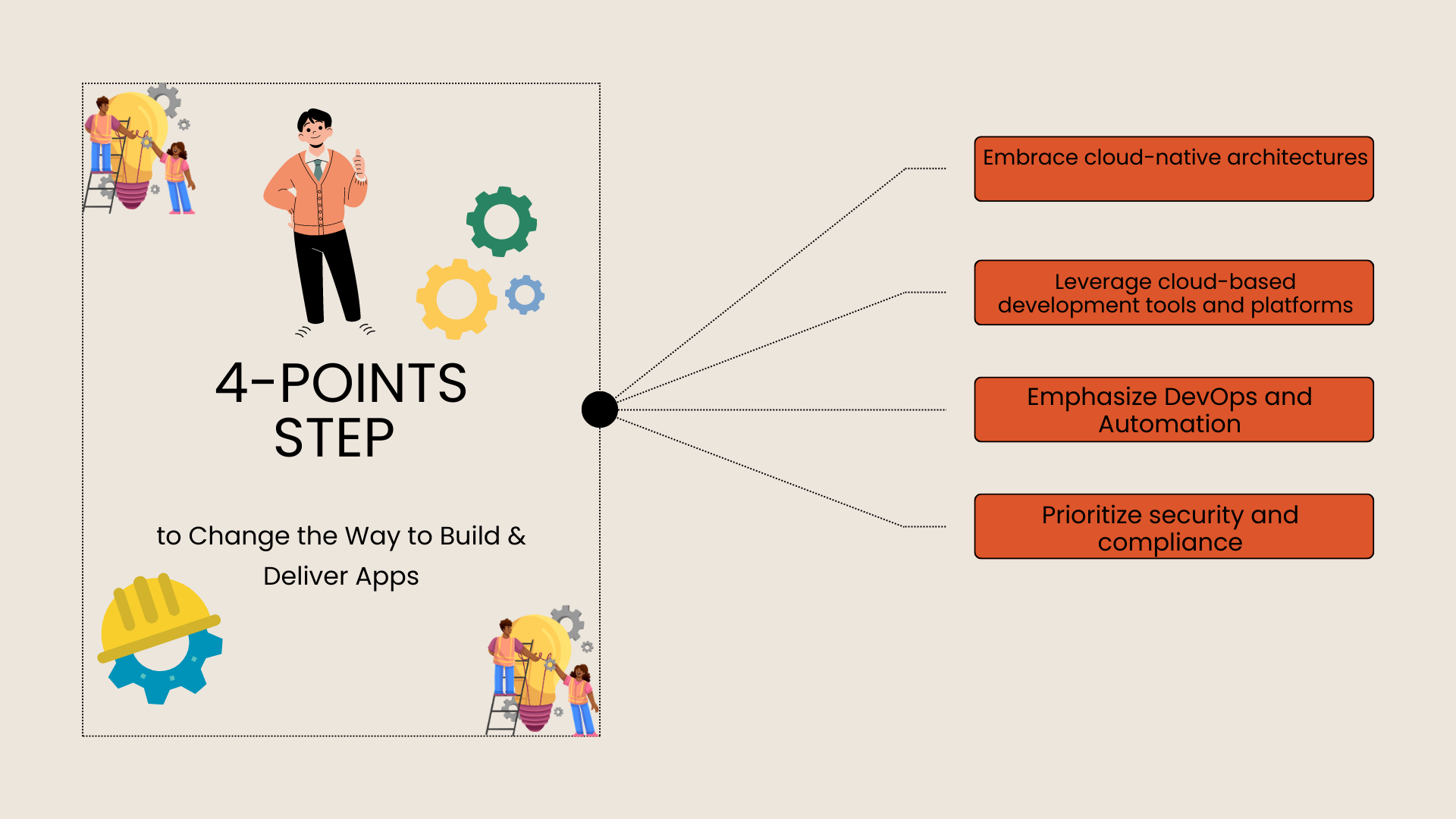

Cloud-Native Technologies: These technologies are designed to run natively on cloud platforms and take advantage of the cloud's scalability, elasticity, and resilience. They include containerization, Kubernetes orchestration, and microservices architecture. In 2023, cloud-native technologies are expected to become more mainstream as businesses modernize their existing applications and build new ones in the cloud.

Zoom is a successful use case. It uses containerization and Kubernetes orchestration to run its video conferencing service in the cloud, which lets it scale up or down with user demand, optimize costs, improve performance, and deliver a seamless user experience.
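"Scaling up or down with demand" in Kubernetes is commonly handled by the Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (0.1 by default) of 1.0. The function below is a simplified sketch of that rule. The `min_replicas`/`max_replicas` defaults are assumptions, and the real controller also applies stabilization windows and pod-readiness handling that are omitted here. It is not Zoom's configuration.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified Horizontal Pod Autoscaler scaling rule.

    desired = ceil(current_replicas * current_metric / target_metric),
    skipped when the ratio is within `tolerance` of 1.0, then clamped to
    [min_replicas, max_replicas]. Stabilization and readiness logic are omitted.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no scaling action
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target: scale out to 6.
    print(desired_replicas(4, current_metric=90, target_metric=60))
    # 4 pods averaging 20% CPU against a 60% target: scale in to 2.
    print(desired_replicas(4, current_metric=20, target_metric=60))
```

The tolerance band prevents the autoscaler from adding or removing pods in response to small metric fluctuations around the target.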

Artificial Intelligence and Machine Learning: AI and ML are increasingly being used in cloud engineering to automate tasks, improve accuracy, and drive innovation. In 2023, AI and ML are expected to play a more significant role in cloud engineering, with the emergence of new AI-powered cloud services, such as intelligent automation, cognitive services, and predictive analytics.

Online retailer Wayfair uses ML algorithms to personalize its website and mobile app experience for each user based on browsing and purchase history, improving customer engagement, increasing conversions, and driving revenue growth.
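To illustrate the basic idea behind history-based personalization, the sketch below implements simple item co-occurrence recommendation ("people who engaged with X also engaged with Y"), a classic collaborative-filtering baseline. It is a swapped-in illustrative technique, not Wayfair's method, and the product names and histories are hypothetical. Production recommenders typically add learned models, recency weighting, and normalization for popular items.

```python
from collections import Counter, defaultdict
from itertools import combinations
from typing import Dict, List, Set


def build_co_occurrence(histories: List[Set[str]]) -> Dict[str, Counter]:
    """Count how often each pair of products appears in the same user's history."""
    co_counts: Dict[str, Counter] = defaultdict(Counter)
    for items in histories:
        for a, b in combinations(sorted(items), 2):
            co_counts[a][b] += 1
            co_counts[b][a] += 1
    return co_counts


def recommend(user_items: Set[str], co_counts: Dict[str, Counter], k: int = 3) -> List[str]:
    """Score unseen products by how often they co-occur with the user's products."""
    scores: Counter = Counter()
    for item in user_items:
        for other, count in co_counts.get(item, {}).items():
            if other not in user_items:
                scores[other] += count
    return [item for item, _ in scores.most_common(k)]


if __name__ == "__main__":
    histories = [  # hypothetical browsing/purchase histories, one set per user
        {"sofa", "coffee table", "rug"},
        {"sofa", "rug", "floor lamp"},
        {"bed frame", "mattress", "rug"},
        {"sofa", "coffee table"},
    ]
    co = build_co_occurrence(histories)
    print(recommend({"sofa", "rug"}, co, k=2))
```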

By keeping an eye on these emerging trends and technologies, businesses can stay ahead of the curve in cloud engineering and leverage the latest advancements to optimize their cloud operations, mitigate risks, and drive innovation and growth.

Recent Use Cases of Positive ROI with Cloud Engineering Technology

A 2017 Nucleus Research study found that companies using cloud-based technologies see an average return of $9.48 for every $1 spent on cloud technology.

According to Gartner, worldwide end-user spending on public cloud services was forecast to reach $332.3 billion in 2021, an increase of 23.1% over the previous year. This suggests that many businesses are continuing to invest in cloud technologies and may be seeing positive returns on their investment.

- Netflix: By migrating its infrastructure to Amazon Web Services (AWS), Netflix was able to reduce its costs by up to 50%, while also improving its scalability and reliability. This allowed Netflix to invest more in content creation and enhance its customer experience, ultimately driving growth and success.

- Intuit: Intuit, the maker of TurboTax and QuickBooks, used cloud engineering technology to improve its product development processes. By migrating its development and test environments to the cloud, Intuit was able to reduce its time to market by 50%, while also reducing costs and improving agility. This allowed Intuit to stay competitive in a fast-moving market and better serve its customers.

- Airbnb: Airbnb has also been a leader in leveraging cloud engineering technology to scale its business. By using AWS, Airbnb was able to quickly scale its infrastructure to meet demand during peak travel seasons, while also improving its performance and reliability. This allowed Airbnb to provide a seamless customer experience and ultimately drive growth and success.

These success stories show that by embracing the future of cloud engineering, businesses can not only optimize their cloud operations, reduce costs, improve performance, and enhance customer experiences, but also achieve a significant return on investment (ROI) and a faster time to market (TTM).

Power Through into the Future of Cloud Engineering with Valuebound

If you are looking to leverage the latest trends and technologies in cloud engineering to drive growth and success, Valuebound can help. As an AWS Advanced Tier Services Partner, Valuebound offers a range of AWS service offerings that can help you optimize your cloud operations, reduce costs, improve performance, and enhance customer experiences.

Whether you are just starting out on your cloud journey or looking to optimize your existing cloud infrastructure, Valuebound can provide the expertise and support you need to achieve your goals. So why wait? Contact Valuebound today to learn more about how we can help you harness the power of the cloud and achieve your business objectives.